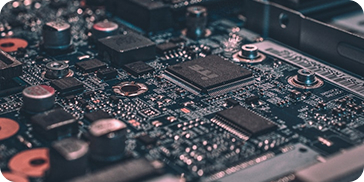

IT Services in Nepal for Scalable Digital Growth

Business Services to Accelerate Digital Transformation

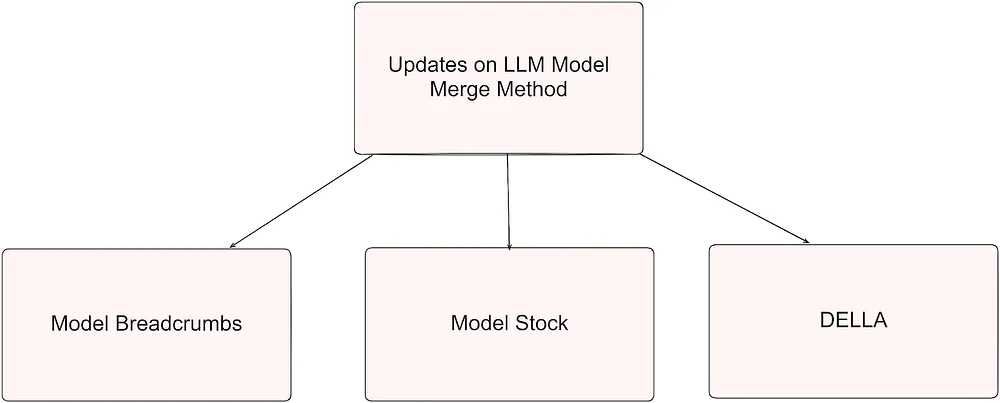

Featured Article

The Evolution of IT Technology: Transforming the World Around Us

14 Feb. 2025